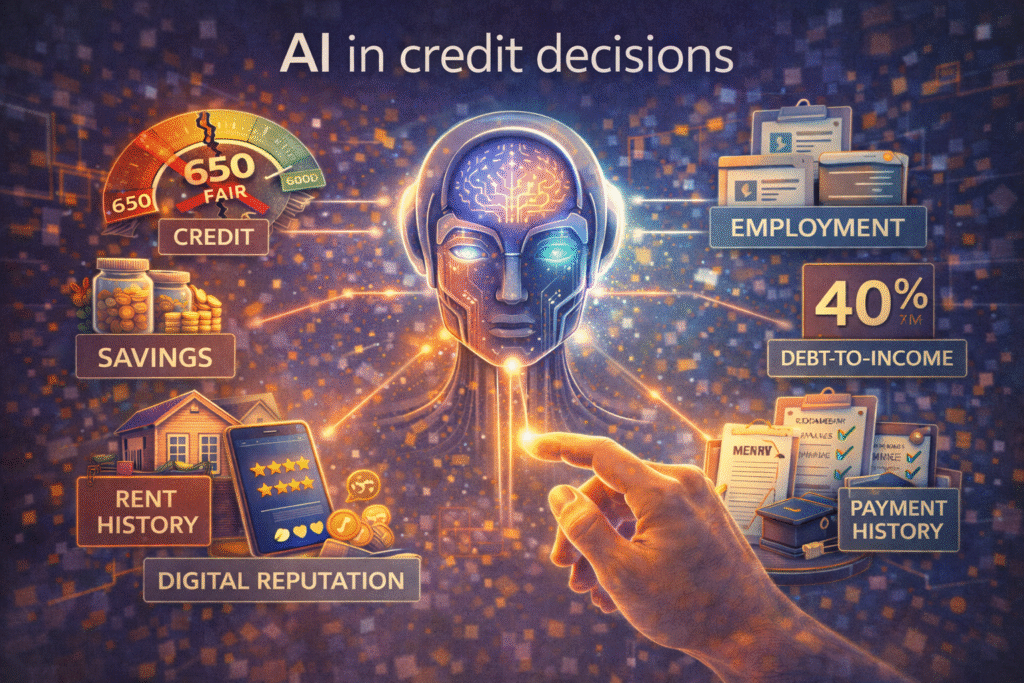

Your credit score gets checked in milliseconds now. Lenders’ AI systems can review hundreds of data points, cross-reference multiple databases, and determine whether you qualify for a mortgage or car loan before you finish the application. It’s efficient, but when an error exists on your credit report, that same speed works against you. A single incorrect late payment doesn’t just sit there anymore; it’s processed, weighted, and multiplied across dozens of risk calculations, potentially triggering denials from multiple lenders before you even know something’s wrong.

The shift toward AI in credit decisions has introduced a new challenge: these systems can’t distinguish between accurate data and mistakes. They process everything with equal confidence and reassess creditworthiness continuously based on whatever information they receive. Understanding how these algorithms function, where errors enter the pipeline, and why traditional dispute methods often fall short is now essential for protecting yourself in a credit environment where machines make the calls.

The Invisible Machinery: How AI Models Digest Your Credit Data

Traditional FICO scores operate on a relatively straightforward premise: they analyze five weighted categories from your credit report (payment history, amounts owed, length of credit history, new credit, and credit mix) to produce a three-digit number. AI-driven underwriting works very differently. Instead of relying on a handful of categories, machine learning models simultaneously process hundreds or even thousands of variables pulled from your credit file. Where FICO might see “three credit cards with 30% utilization,” a machine learning model examines spending behavior on each card, payment timing relative to statement dates, balance trends over time, and how those behaviors compare to millions of other borrowers. This granular analysis happens in milliseconds, but it also means a single incorrect late payment doesn’t just lower a score; it becomes raw material for dozens of derivative risk calculations.

The data ingestion process behind AI-driven credit decisions multiplies the opportunities for error propagation compared with traditional lending. When you submit an application, automated systems can pull data from one or more of the three credit bureaus (Experian, Equifax, and TransUnion) along with alternative sources such as utility payments, rental records, and bank account activity. If one bureau reports an incorrect late payment while the others don’t, the model does not necessarily treat the discrepancy as suspicious. Instead, the algorithm processes all inputs as valid and may even weight the incorrect data more heavily because of patterns learned during training. The human review step that once caught obvious inconsistencies is gone.
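To make the missing consistency check concrete, here is a minimal Python sketch of the kind of cross-bureau comparison a human reviewer performs almost automatically. The record layout, account IDs, and status labels are hypothetical and chosen only for illustration; real bureau data formats differ, and this is not how any particular lender's system works.

```python
from collections import defaultdict

# Hypothetical tradeline records: (bureau, account_id, month, status).
REPORTS = [
    ("Experian", "card-001", "2024-03", "on_time"),
    ("Equifax", "card-001", "2024-03", "late_30"),
    ("TransUnion", "card-001", "2024-03", "on_time"),
    ("Experian", "auto-002", "2024-03", "on_time"),
    ("Equifax", "auto-002", "2024-03", "on_time"),
    ("TransUnion", "auto-002", "2024-03", "on_time"),
]


def find_discrepancies(reports):
    """Return account-months where the bureaus report different statuses."""
    by_key = defaultdict(dict)
    for bureau, account_id, month, status in reports:
        by_key[(account_id, month)][bureau] = status
    return {
        key: statuses
        for key, statuses in by_key.items()
        if len(set(statuses.values())) > 1
    }


discrepancies = find_discrepancies(REPORTS)
for (account_id, month), statuses in discrepancies.items():
    print(account_id, month, statuses)
```

In this toy data only `card-001` is flagged, because a single bureau disagrees with the other two. A pipeline that skips a step like this simply passes the conflicting late payment downstream as if it were confirmed.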

Feature engineering is one of the least understood ways automated underwriting amplifies errors. This process transforms raw credit data into hundreds of predictive variables. A single incorrect late payment doesn’t remain isolated; it feeds derived measures such as payment velocity, the spread of distress across accounts, recovery likelihood, and seasonal financial stress. Through this transformation, one factual error can become dozens of correlated risk signals, all derived from information that should never have existed in your file.
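The amplification effect can be illustrated with a small Python sketch. The feature names below are invented for this example and are not taken from any real scoring model; the point is only that one changed input alters every feature derived from it.

```python
def longest_streak(history):
    """Length of the longest run of consecutive on-time payments."""
    best = current = 0
    for status in history:
        current = current + 1 if status == "on_time" else 0
        best = max(best, current)
    return best


def engineer_features(history):
    """Derive illustrative (hypothetical) risk features.

    history is ordered oldest to newest; each entry is "on_time" or "late".
    """
    n = len(history)
    late_idx = [i for i, status in enumerate(history) if status == "late"]
    return {
        "late_count": len(late_idx),
        "late_ratio": len(late_idx) / n,
        "months_since_last_late": (n - 1 - late_idx[-1]) if late_idx else None,
        "late_in_last_12_months": any(i >= n - 12 for i in late_idx),
        "longest_on_time_streak": longest_streak(history),
    }


clean = ["on_time"] * 24
with_error = clean.copy()
with_error[20] = "late"  # a single misreported payment

clean_features = engineer_features(clean)
error_features = engineer_features(with_error)
changed = [k for k in clean_features if clean_features[k] != error_features[k]]
print(changed)
```

Here one incorrect entry out of 24 months changes all five derived features. A production model with hundreds of engineered variables would spread the same error much further.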

Real-time decisioning makes inaccurate data even more damaging. Unlike traditional underwriting, where a human might question suspicious entries, automated systems assume reported information has already been verified. Once ingested, that unverified data is immediately incorporated into risk calculations, allowing approvals, denials, or pricing changes to occur before you even know an error exists.

How Machine Learning Models Process Erroneous Credit Data

Machine learning models apply the same analytical confidence to inaccurate data as they do to correct information, which is one of the most dangerous flaws in AI in credit decisions. When an algorithm encounters a collection account on your credit report, it doesn’t question whether the debt is fraudulent, misattributed, or already paid. Instead, the system incorporates the data point into its risk calculation, weighting it based on patterns learned from millions of other credit files. Your individual context—years of perfect payments or proof that the account is an error—doesn’t override the statistical assumption that collections signal higher default risk.

The training data problem compounds this weakness in AI in credit decisions. These models learn by analyzing historical credit bureau data, which itself contains documented error rates. When flawed data exists in training sets, the algorithms can mistakenly treat reporting inconsistencies as legitimate risk indicators. Because the model cannot distinguish correlation from causation, borrowers may be penalized simply for resembling historical error patterns, even when their current credit information is accurate.

Identity resolution failures create some of the most severe breakdowns in AI in credit decisions. When credit bureaus misattribute accounts due to similar names, addresses, or partial Social Security numbers, automated underwriting systems absorb the mixed file without detecting the identity conflict. Tradelines belonging to different individuals are processed as a single profile, producing risk assessments based on financial behavior that isn’t yours—something a human reviewer would likely question immediately.
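A mixed file can arise from nothing more than a loose matching rule. The Python sketch below assumes a hypothetical rule that keys records on last name plus the last four Social Security digits, and compares it with a stricter hypothetical rule. The names, fields, and rules are illustrative only and do not describe any bureau's actual matching logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tradeline:
    name: str
    ssn_last4: str
    birth_year: int
    account: str
    status: str


def loose_key(t):
    # Hypothetical loose rule: last name plus last four SSN digits.
    return (t.name.split()[-1].lower(), t.ssn_last4)


def strict_key(t):
    # Hypothetical stricter rule: full name, SSN digits, and birth year.
    return (t.name.lower(), t.ssn_last4, t.birth_year)


def group(tradelines, key_fn):
    """Group tradelines into credit files according to a matching rule."""
    files = {}
    for t in tradelines:
        files.setdefault(key_fn(t), []).append(t)
    return files


TRADELINES = [
    Tradeline("Maria Lopez", "1234", 1985, "card-A", "on_time"),
    Tradeline("Mario Lopez", "1234", 1962, "loan-B", "collection"),
]

loose_files = group(TRADELINES, loose_key)
strict_files = group(TRADELINES, strict_key)
print(len(loose_files), len(strict_files))
```

Under the loose rule, both people collapse into one file, so the collection account is scored as if it belonged to the on-time borrower. The stricter rule keeps them apart, which is the distinction a downstream model never gets the chance to make.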

The velocity problem magnifies how AI in credit decisions spreads damage across multiple lenders at once. When you apply for credit, several institutions may pull your report within days, each running proprietary models on the same flawed data. One reporting error doesn’t lead to a single rejection—it can trigger multiple denials in parallel before you even realize the issue exists.

Black-box opacity further complicates challenges created by AI in credit decisions. These systems rely on hundreds of interacting variables, making it difficult to identify which specific data points caused a denial. Adverse action notices provide generic explanations, offering little insight into whether the true trigger was an incorrect collection, a misreported balance, or a mixed-file entry.

Without transparency or context, consumers remain exposed to repeated negative outcomes driven by AI in credit decisions operating on inaccurate or misleading data—often with no clear path to correction.

Why Disputing AI Credit Decisions Differs From Traditional Disputes

Current credit reporting laws were written for a world of human decision-making and struggle to address the realities of algorithmic underwriting. The Fair Credit Reporting Act (FCRA), enacted in 1970 and amended over time, grants consumers the right to dispute inaccurate information and receive explanations for adverse actions. However, these protections assume lenders rely on human judgment and can clearly articulate why a loan was denied. In an AI-driven decision, hundreds of variables interact simultaneously, making it difficult to reduce the outcome to a simple list of reasons. This creates a disconnect between your legal right to an explanation and the opaque nature of algorithmic decision-making.

Adverse action notices have become far less useful in the era of AI-driven lending. Instead of pointing to specific, verifiable credit report entries, notices now often contain abstract summaries generated by models. You may be told you lack “credit history depth” despite having a 15-year file, because the model defines depth using complex calculations involving account diversity, utilization trends, and payment timing. These notices provide no clear guidance on what to dispute, leaving consumers unable to take meaningful corrective action.

The recency problem further exposes the shortcomings of automated credit decisions. Credit bureaus have up to 30 days (sometimes 45) to investigate disputes, but automated systems continue making decisions using uncorrected data during that period. While a dispute is pending, a lender’s model may reduce credit limits, deny applications, or increase interest rates. Even after the error is fixed, these automated consequences can remain, because algorithmic actions are not automatically reversed.

Proprietary lender models compound the issue in AI in credit decisions. Each institution uses its own machine learning systems trained on unique datasets, meaning the same corrected credit report can produce different outcomes across lenders. Fixing an error might raise your FICO score, but AI in credit decisions may still deny you if the model weighted other variables more heavily or relied on interactions between multiple data points rather than the corrected item alone.

The documentation burden for challenging AI-driven outcomes is especially severe. In practice, consumers must show both that credit report information was inaccurate and that the inaccuracy directly caused the algorithmic denial. While disputing factual errors is possible, proving causation inside a proprietary model is nearly impossible. Without access to the lender’s internal logic, borrowers are effectively denied meaningful recourse, even when errors clearly influenced the decision.

Alternative Data Creates New Credit Opportunities And Error Risks

Alternative data integration into AI credit models creates inclusion opportunities for consumers with thin traditional credit files while simultaneously introducing novel error vectors that lack established dispute infrastructure. Utility payments, telecommunications bills, and rental payment histories can help individuals who lack credit cards or loans demonstrate financial responsibility. Machine learning models can analyze these payment patterns to assess creditworthiness for borrowers who would be invisible to traditional scoring systems.

However, each alternative data source represents a new reporting entity that may lack the data quality controls and dispute processes that traditional credit furnishers have developed over decades. A utility company might report your account under a slightly different name spelling, creating an identity mismatch. A rental payment service might incorrectly attribute your roommate’s late payment to your record. A telecommunications provider might report an account you closed years ago as currently delinquent. These errors enter AI credit models with the same weight as accurate information, but you often discover them only after denial, with no clear path to correction comparable to the established credit bureau dispute process.

Bank account analysis represents a particularly intrusive form of alternative data that AI models increasingly use to assess income stability and spending patterns. When you authorize a lender to review your bank account transactions—often buried in application fine print—their algorithms scan months of transaction history looking for signals of financial stress or stability. Regular deposits suggest stable income. Consistent bill payments indicate reliability. Overdraft fees signal money management problems. However, this analysis creates vulnerability to bank errors and fraudulent transactions that have nothing to do with your actual financial behavior. A single bank error that temporarily shows your account overdrawn can register as an overdraft in the AI’s analysis. A fraudulent charge that you’re disputing with your bank might appear as overspending in categories the algorithm associates with financial distress. The AI model processes these anomalies as legitimate data points about your financial behavior, and unlike credit report errors that you can dispute through established bureau processes, bank transaction errors often can’t be corrected in the lender’s system even after your bank fixes them.
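As a rough illustration, the Python sketch below replays a hypothetical ledger and counts the postings that leave the balance negative, which is one simple way an overdraft signal might be derived. The transactions, the opening balance, and the rule itself are assumptions made for this example, not a description of any lender's method.

```python
from datetime import date

# Hypothetical ledger entries: (date, description, amount).
TRANSACTIONS = [
    (date(2024, 5, 1), "payroll deposit", 2500.00),
    (date(2024, 5, 3), "rent", -1400.00),
    (date(2024, 5, 6), "duplicate debit (bank error)", -1400.00),
    (date(2024, 5, 7), "bank error reversal", 1400.00),
    (date(2024, 5, 15), "utilities", -120.00),
]


def count_overdraft_events(transactions, opening_balance=0.0):
    """Count postings that leave the running balance below zero."""
    balance = opening_balance
    events = 0
    for _, _, amount in sorted(transactions, key=lambda t: t[0]):
        balance += amount
        if balance < 0:
            events += 1
    return events


# What a snapshot-based analysis sees, including the bank's mistake.
as_reported = count_overdraft_events(TRANSACTIONS, opening_balance=200.0)

# What the customer's real behavior looks like with the error removed.
genuine = [t for t in TRANSACTIONS if "bank error" not in t[1]]
corrected = count_overdraft_events(genuine, opening_balance=200.0)

print(as_reported, corrected)
```

The bank reverses its duplicate debit a day later, yet the replay that includes it still records one overdraft event, while the customer's genuine transactions produce none. If the lender analyzed the account before the reversal posted, or ignores reversals, the false signal stays in its records.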

The verification challenge for alternative data stems from the absence of standardized reporting infrastructure comparable to traditional credit reporting. The three major credit bureaus, despite their flaws, operate under regulatory oversight with established dispute procedures and legal obligations to investigate consumer challenges. Alternative data sources often lack these structures. If a utility company incorrectly reports your payment history to a data aggregator that feeds AI credit models, you may have no obvious way to dispute it with that aggregator. The utility company might not even know its data is being used for credit decisions. The lender using the AI model might not disclose which specific alternative data sources influenced its decision. You’re left trying to correct errors in a fragmented ecosystem where no single entity takes responsibility for data accuracy, and your legal rights can be unclear because much alternative data flows through channels that sit outside the dispute framework established for traditional credit reporting.

Digital footprint incorporation into some AI credit models introduces data sources that consumers don’t typically associate with creditworthiness and that may be outdated or incorrect. Certain algorithms analyze publicly available information including address history from property records, employment information from professional networking sites, and even educational background from public databases. This data can help AI models assess stability and verify identity, but it also creates risk when the information is wrong. Your LinkedIn profile might show an old employer because you haven’t updated it in years, but an AI model might interpret this as employment instability or dishonesty if it doesn’t match your application. Public records might show an address where you lived briefly during a difficult period, and the algorithm might associate that location with higher default risk based on geographic patterns in its training data. Unlike credit report information, which you can review and dispute, you often don’t know which public data sources an AI model accessed, making it impossible to identify and correct errors before they influence credit decisions.

Consent confusion compounds the alternative data problem because many consumers unknowingly authorize extensive data collection through application fine print, then discover errors only after denial with limited ability to opt out or correct the record. When you apply for credit online, you might click through several screens of disclosures, one of which authorizes the lender to access your bank account transactions, utility payment history, rental records, and other alternative data sources. This consent is typically all-or-nothing—you can’t selectively authorize access to accurate data sources while excluding those you know contain errors. Once you’ve provided consent and the lender’s AI has processed the data, withdrawing consent doesn’t reverse the decision made using that information. You’re left in a position where you authorized access to data you

The New Reality of Algorithmic Credit

The speed that makes AI-driven credit decisions so efficient for lenders has fundamentally altered the balance of power between consumers and the systems that judge their creditworthiness. What once took days and involved human oversight now happens in milliseconds through algorithms that can’t distinguish between accurate data and errors. A single mistake on your credit report doesn’t just lower your score anymore—it cascades through dozens of risk calculations, triggers automated adverse actions across multiple lenders, and perpetuates itself through continuous monitoring systems before you even know something’s wrong. The legal protections established for human-reviewed credit decisions haven’t caught up to this algorithmic reality, leaving you with dispute rights that don’t address the core problem: machines making consequential financial decisions about your life with absolute confidence in data that might be completely wrong. You’re no longer just managing your credit—you’re navigating an automated system that processes errors with the same certainty it applies to facts, and the burden of proving the machine wrong falls entirely on you.