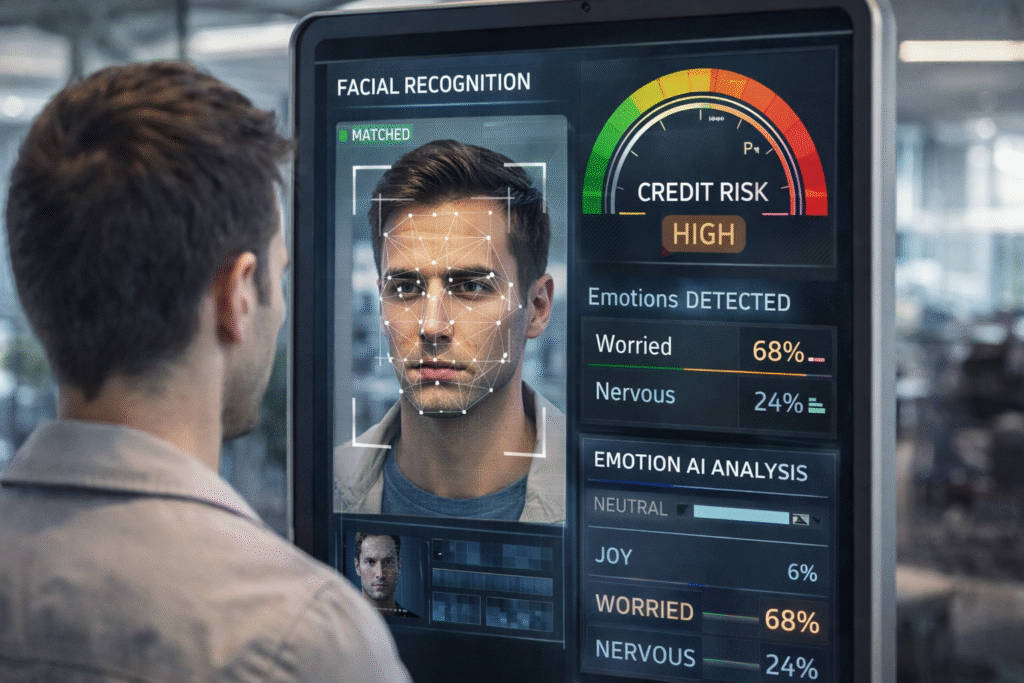

When you apply for credit online, your lender might be watching more than your financial history. Some newer artificial intelligence systems can analyze your facial expressions, voice patterns, and apparent emotional responses during a digital application and feed those readings into facial recognition credit decisions. These technologies promise faster approvals and better risk assessment, but they also add complex new variables to an already complicated credit evaluation process.

For the millions of Americans dealing with credit report errors, facial recognition credit decisions present both opportunities and risks that deserve careful attention. Emotion AI could help lenders look beyond damaged credit scores to broader behavioral signals, but it may also amplify bias or create new obstacles based on appearance, tone, or emotional response during a high-pressure application. That makes preparation and awareness essential.

The Hidden Data Points: What Facial Recognition and Emotion AI Actually Measure in Financial Contexts

Facial recognition systems play a growing role in credit evaluation, and they can capture far more than basic identity verification. Emotion analysis algorithms claim to read micro-expressions that last only a fraction of a second, tracking subtle changes in facial muscle tension that most people cannot consciously detect. During a typical online credit application, the technology may monitor eye movement patterns, blink rates, and pupil dilation to estimate stress levels and perceived truthfulness, and those estimates can feed into facial recognition credit decisions. Voice analysis components may simultaneously evaluate vocal tremor, variations in speech pace, and tonal shifts that the system associates with anxiety or deception.

This data collection extends beyond conscious behavioral cues. Some emotion AI systems can detect minute changes in skin coloration caused by variations in blood flow, which are often interpreted as signs of emotional arousal or stress. Proprietary models then weigh these biometric signals using correlations drawn from large behavioral datasets. The underlying assumption is that emotional responses during an application can predict future repayment behavior.

Technical limitations significantly affect the reliability of facial recognition credit decisions. Poor lighting can cause a system to misread shadows as emotional indicators, while low-quality cameras may miss the subtle details that emotion analysis depends on. Device positioning also changes how facial features are interpreted, introducing inconsistencies that can shape the outcome regardless of a borrower's actual financial responsibility.

Cultural and demographic differences in emotional expression further complicate outcomes. Studies show that facial recognition accuracy varies across racial groups, and cultural norms around eye contact, facial expressiveness, and speech patterns differ widely. When a single model trained mostly on one dominant population interprets all of these variations the same way, facial recognition credit decisions may unintentionally penalize people for their appearance or communication style rather than their creditworthiness.

How AI Bias Compounds Existing Credit Report Problems

Existing credit report errors create a foundation on which AI bias can build. When emotion AI systems encounter applicants whose credit concerns stem from reporting inaccuracies, the technology may interpret natural anxiety or frustration as a sign of financial irresponsibility. The two problems then compound: an emotional reading that may be accurate (the applicant really is stressed) is combined with flawed credit data, producing an outcome that looks algorithmically sound but rests on incorrect information.

The feedback loop in AI systems is a particularly insidious problem for both facial recognition credit decisions and credit repair efforts. When biased outcomes generate data points that reinforce the algorithm's assumptions, the system grows more confident in its flawed correlations. An applicant denied partly because of emotional analysis never gets a chance to show that lender they would have repaid, so the denial itself enters the training data as evidence linking their traits to higher risk, raising the barrier for future applicants who share those traits.
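
To make the loop concrete, here is a toy Python simulation. Every number and name in it is a hypothetical assumption, not a description of any real lender's system: applicants with and without some trait have the same true default rate, denied applicants are logged as "high risk" because their repayment is never observed, and each retraining nudges the trait penalty by the gap it sees in those logged labels (`LEARNING_RATE`, `simulate`, and the other names are invented for illustration).

```python
"""Toy simulation of a lending feedback loop (every number is hypothetical)."""

TRUE_DEFAULT_RATE = 0.10   # assumed identical for applicants with and without the trait
BASE_DENIAL_RATE = 0.20    # assumed denial rate for applicants without the trait
LEARNING_RATE = 0.5        # hypothetical: how strongly each retraining reacts to the gap


def logged_high_risk_rate(denial_rate: float) -> float:
    """Share of a group recorded as "high risk" in the next training set.

    Denied applicants are all recorded as high risk because their repayment is
    never observed; approved applicants are recorded as high risk only if they
    actually default.
    """
    return denial_rate + (1 - denial_rate) * TRUE_DEFAULT_RATE


def simulate(initial_penalty: float, rounds: int) -> list[float]:
    """Return the extra denial rate applied to the trait group after each retraining."""
    penalty = initial_penalty
    history = []
    for _ in range(rounds):
        trait_denial_rate = min(1.0, BASE_DENIAL_RATE + penalty)
        gap = logged_high_risk_rate(trait_denial_rate) - logged_high_risk_rate(BASE_DENIAL_RATE)
        # Retraining treats the logged gap as fresh evidence of higher risk,
        # so the penalty grows even though true default rates never differ.
        penalty = min(1.0 - BASE_DENIAL_RATE, penalty + LEARNING_RATE * gap)
        history.append(penalty)
    return history


if __name__ == "__main__":
    for i, p in enumerate(simulate(initial_penalty=0.02, rounds=5), start=1):
        print(f"After retraining {i}: extra denial rate for the trait group = {p:.1%}")
```

In this sketch the logged gap is always a fixed fraction of the current penalty, so a small initial penalty of 2 percentage points grows with every retraining even though both groups repay at identical rates. With no initial penalty, nothing changes, which is the point: the loop amplifies a bias that is already there.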

Medical conditions and temporary life circumstances further complicate facial recognition credit decisions. Neurological conditions affecting facial muscle control, anxiety disorders influencing speech patterns, or temporary stress from unrelated life events can be misinterpreted as signs of deception or instability. Unlike traditional credit factors grounded in long-term financial behavior, these assessments may penalize individuals for factors entirely beyond their financial control.

Digital redlining can emerge when facial recognition credit decisions inadvertently link facial features or emotional expressions to geographic or socioeconomic indicators. Regional accents, facial structures more common in specific ethnic groups, or culturally shaped expression styles may then become unintended proxies for creditworthiness, creating systemic disparities.

Appealing a facial recognition credit decision is significantly more complex than disputing a traditional credit report error. Consumers can challenge factual inaccuracies on a report, but contesting an emotion-based assessment requires insight into proprietary analysis models that lenders rarely disclose. That makes it difficult to prove that the algorithm's interpretation, rather than actual financial risk, drove the outcome.

When Emotion AI Gets It Right for the Wrong Reasons

Statistical accuracy in emotion AI systems can mask problematic processes when facial recognition credit decisions rely on biased correlations. A system may correctly predict loan defaults while simultaneously disadvantaging protected groups or individuals with legitimate financial challenges. High prediction accuracy alone does not show that the factors driving a decision are fair or valid.
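
A small worked example shows how this can happen. The counts below are invented toy numbers, not data from any real system: both groups have 100 applicants and 10 eventual defaulters, the model denies every defaulter in both groups, but it also denies 20 creditworthy applicants in Group B. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupOutcome:
    """Hypothetical lending outcomes for one group of applicants (toy numbers)."""
    name: str
    applicants: int
    defaulters: int          # applicants who would actually default
    defaulters_denied: int   # defaulters the model correctly denied
    good_denied: int         # applicants who would have repaid but were denied

    @property
    def good_borrowers(self) -> int:
        return self.applicants - self.defaulters

    @property
    def correct(self) -> int:
        # Correct decisions = defaulters denied + good borrowers approved.
        return self.defaulters_denied + (self.good_borrowers - self.good_denied)

    @property
    def approval_rate(self) -> float:
        denied = self.defaulters_denied + self.good_denied
        return (self.applicants - denied) / self.applicants

    @property
    def default_catch_rate(self) -> float:
        return self.defaulters_denied / self.defaulters

    @property
    def false_denial_rate(self) -> float:
        return self.good_denied / self.good_borrowers


def overall_accuracy(groups: list[GroupOutcome]) -> float:
    """Share of all decisions that were correct, pooled across groups."""
    return sum(g.correct for g in groups) / sum(g.applicants for g in groups)


# Same size, same true default rate, very different treatment (toy data).
GROUPS = [
    GroupOutcome("Group A", applicants=100, defaulters=10, defaulters_denied=10, good_denied=0),
    GroupOutcome("Group B", applicants=100, defaulters=10, defaulters_denied=10, good_denied=20),
]

if __name__ == "__main__":
    print(f"Overall accuracy: {overall_accuracy(GROUPS):.0%}")
    for g in GROUPS:
        print(f"{g.name}: caught {g.default_catch_rate:.0%} of defaults, "
              f"approved {g.approval_rate:.0%}, "
              f"wrongly denied {g.false_denial_rate:.0%} of good borrowers")
```

Here overall accuracy is 90% (180 of 200 decisions correct) and every default is caught in both groups, yet Group B's approval rate is 70% against Group A's 90%, because about 22% of Group B's good borrowers were turned away. Pooled accuracy averages over everyone, so it hides where the errors fall.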

Cultural differences in emotional expression also create unequal outcomes within facial recognition credit decisions. Eye contact norms vary widely across cultures, with some treating direct gaze as respectful and others as confrontational. Facial expressiveness, vocal tone, and gesture frequency likewise reflect cultural conditioning rather than financial integrity, yet AI systems may read these traits as indicators of trustworthiness or risk.

The challenge of distinguishing financial stress from general life stress exposes a core weakness in facial recognition credit decisions. Applicants may display anxiety caused by health issues, family problems, or work pressures that are indistinguishable from credit-related stress. Because emotion AI lacks contextual awareness, it evaluates these emotional signals without understanding their true source.

Genuine concern about one’s financial situation can be misclassified as deception by emotion AI models used in facial recognition credit decisions. Applicants with damaged credit may naturally exhibit stress during applications—not due to dishonesty, but because they recognize their financial vulnerability. This creates a paradox where transparency and awareness become risk factors.

The temporal nature of emotional analysis further complicates facial recognition credit decisions. Emotional states captured during short application windows may be influenced by unrelated, momentary factors such as fatigue, caffeine, or daily stressors. Yet these fleeting signals can become long-term inputs in algorithmic systems that affect access to credit for extended periods.

The Gap Between Traditional Credit Laws and AI Innovation

Current credit regulations, including the Fair Credit Reporting Act (FCRA, enacted in 1970) and the Equal Credit Opportunity Act (ECOA, enacted in 1974), were written decades before artificial intelligence became a factor in financial decision-making. These laws focus on the accuracy of factual information and prohibit discrimination based on protected characteristics, but they do not specifically address the collection and use of biometric or emotional data in credit evaluation. The framework lacks specific provisions for algorithmic transparency, emotional data retention, or a right to understand AI-influenced decisions.

Jurisdictional challenges complicate the regulatory landscape when AI processing occurs across multiple platforms and geographic locations. Credit applications may involve facial recognition analysis performed by third-party vendors, emotion AI processing conducted in different states or countries, and algorithmic scoring systems operated by separate entities. This distributed processing model makes it difficult to determine which regulations apply and which agencies have enforcement authority when AI-driven credit decisions appear unfair or discriminatory.

The emerging legal framework around algorithmic transparency in financial services remains fragmented and incomplete. Some states have enacted biometric privacy laws requiring consent for facial recognition data collection, but these laws often contain exceptions for fraud prevention and risk assessment, and some exempt financial institutions altogether; Illinois's Biometric Information Privacy Act, for example, does not apply to institutions subject to Title V of the Gramm-Leach-Bliley Act. What counts as sufficient disclosure about AI usage also varies significantly across jurisdictions, leaving consumers with inconsistent protections depending on where they live and which lenders they approach.

Data retention policies for biometric information differ substantially from traditional credit data handling requirements. While credit reports are subject to specific time limits and dispute processes, emotional and biometric data collected during applications may be stored indefinitely or shared with third parties under different legal frameworks. The lack of standardized retention periods for AI-generated emotional assessments means that a single application session could influence your credit profile for an undefined period.

Traditional adverse action notices may not adequately explain AI-influenced decisions, leaving consumers without a clear understanding of why their applications were denied. ECOA and its implementing Regulation B require lenders to state the specific principal reasons for a denial, but these disclosures were designed around traditional underwriting criteria such as payment history and debt-to-income ratio. When emotion AI contributes to a decision, the specific emotional markers or behavioral patterns that influenced the outcome may be buried within vague references to “application information” or “risk assessment factors.”

Practical Steps for Navigating AI-Enhanced Credit Evaluation

Environmental and technical considerations for video-based credit applications require strategic preparation to optimize AI assessment outcomes. Proper lighting should illuminate your face evenly without creating harsh shadows that might be misinterpreted as emotional indicators. Position your camera at eye level to maintain natural eye contact patterns and ensure your entire face remains visible throughout the application process. Audio quality significantly impacts voice analysis algorithms, so use a quiet environment with minimal background noise and speak clearly at a consistent volume.

Addressing existing credit report errors becomes critically important before AI systems compound them through emotional analysis. Credit report errors, which by widely cited estimates affect about one in five consumers, can trigger legitimate stress during an application, and emotion AI may interpret that stress as a sign of financial irresponsibility. Systematically reviewing your reports from all three major bureaus and disputing any inaccuracies helps ensure that your emotional responses during an application reflect your actual financial situation rather than frustration with incorrect information.

The following technical considerations can help optimize your presentation during AI-enhanced applications:

- Device positioning: Place cameras at eye level to maintain natural facial angles

- Lighting setup: Use soft, even lighting that eliminates harsh shadows

- Audio environment: Choose quiet spaces with minimal echo or background noise

- Internet connectivity: Ensure stable connections to prevent video quality degradation

- Application timing: Complete applications when you feel calm and focused

- Documentation preparation: Have all required information readily available to minimize stress

Requesting information about AI usage in credit decisions helps you understand how these technologies might affect your applications. While lenders may not disclose specific algorithmic details, asking direct questions about facial recognition, emotion analysis, or biometric data collection can reveal whether these technologies are in use. Document these inquiries and any responses you receive, as this information may prove valuable if you need to dispute decisions or identify patterns of potential bias.

Building resilience against potential AI bias means comparing how different lenders and platforms assess the same profile. Where possible, start with prequalification offers, which typically rely on soft credit inquiries that do not affect your credit scores. Significant differences in approval or terms between otherwise similar lenders may indicate that their AI systems are interpreting your presentation differently, and comparing across platforms helps you see whether specific emotional or behavioral factors are consistently influencing the outcomes.

Documentation strategies for building a paper trail become essential when AI-influenced decisions seem unfair or inconsistent with your actual financial qualifications. Record the specific date, time, and technical conditions of each application, including device used, lighting conditions, and your general emotional state. Save any communications about AI usage in the underwriting process and maintain copies of all application materials. This documentation provides crucial evidence if you need to challenge decisions or demonstrate patterns of algorithmic bias affecting your credit access.
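
If you keep this log digitally, a plain CSV file is enough. The Python sketch below is one hypothetical way to structure it; the field names mirror the conditions listed above and are suggestions only, not a format any lender or regulator requires.

```python
import csv
from dataclasses import asdict, dataclass, fields
from pathlib import Path


@dataclass
class ApplicationRecord:
    """One row in a personal log of credit applications (hypothetical fields)."""
    timestamp: str           # ISO 8601 date and time, e.g. "2024-05-01T10:30"
    lender: str
    product: str             # e.g. "credit card", "auto loan"
    device: str              # laptop, phone, tablet...
    lighting: str            # e.g. "even daylight" or "overhead, harsh shadows"
    emotional_state: str     # your own honest note, e.g. "tired", "calm"
    ai_disclosed: str        # what the lender said about facial or emotion analysis
    outcome: str             # approved / denied / pending, plus any terms offered
    reasons_given: str = ""  # wording from any adverse action notice


def append_record(log_path: Path, record: ApplicationRecord) -> None:
    """Append one record to the CSV log, writing a header row if the file is new."""
    is_new = not log_path.exists()
    fieldnames = [f.name for f in fields(ApplicationRecord)]
    with log_path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        if is_new:
            writer.writeheader()
        writer.writerow(asdict(record))


def load_records(log_path: Path) -> list[dict[str, str]]:
    """Read the whole log back as a list of dictionaries for review."""
    with log_path.open(newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))
```

Calling `append_record(Path("credit_applications.csv"), ApplicationRecord(...))` right after each application builds a dated record, and `load_records` reads it back so you can sort by lender or compare conditions side by side. A spreadsheet with the same columns works just as well; the value lies in recording the details consistently at the time.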

Conclusion: Preparing for the New Reality of AI-Enhanced Credit Decisions

The integration of facial recognition and emotion AI into credit evaluation represents a fundamental shift in how financial institutions assess risk, one that extends far beyond traditional credit metrics into the realm of human behavior and expression. While these technologies promise faster decisions and potentially more nuanced risk assessment, they also introduce complex biases and barriers that can compound existing credit challenges. The gap between current regulations and AI innovation leaves consumers vulnerable to algorithmic discrimination that’s difficult to identify, challenge, or remedy through established dispute processes.

Your preparation for this new landscape requires both technical awareness and strategic action. Understanding how these systems work, optimizing your presentation during digital applications, and addressing existing credit report errors before AI bias amplifies them are all essential for maintaining fair access to credit. The question isn't whether you'll encounter these technologies in your financial journey; it's whether you'll be equipped to navigate them effectively. The future of creditworthiness may increasingly depend not just on your financial history, but on how well you can present yourself to algorithms that are still learning what human trustworthiness actually looks like.